Maciej Mazur

on 25 February 2021

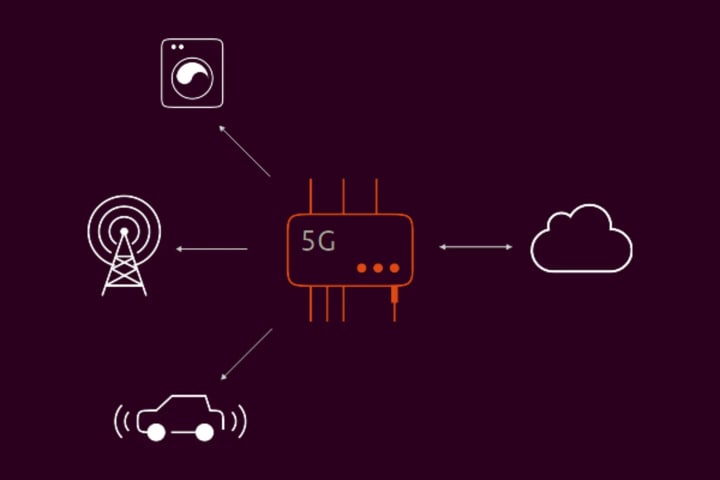

MEC, as ETSI defines it, stands for Multi-access Edge Computing and is sometimes referred to as mobile edge computing. MEC gives content providers and software developers cloud-computing capabilities close to the end users. This micro cloud, deployed at the edge of mobile operators’ networks, offers ultra-low latency and high bandwidth, which enables new types of applications and business use cases. On top of that, an application running on MEC can have real-time access to a subset of radio network information that can improve the overall experience.

MEC Use Cases

MEC opens a completely new ecosystem where a mobile network operator becomes a cloud provider, just like the big hyperscalers. Its unique capabilities, enabled by access to telecom-specific data points and a location close to the user, give mobile network operators (MNOs) a huge advantage. From a workload perspective, we can distinguish four main groups of use cases.

Services based on user location

Services based on user location utilize the location capabilities of the mobile network, provided by functions like the LMF (Location Management Function). Location capabilities become more precise with every standard, and 5G networks aim for sub-meter accuracy at sub-100 ms intervals. This allows an application to track location, even for fast-moving objects like drones or connected vehicles. Other, simpler use cases exist: for example, if you want to build a user engagement app for a football stadium, you can now coordinate your team’s fans for more immersive events. And if you need to control the movement of a swarm of drones, you can just deploy your command and control (C&C) server on the edge.

IoT services

IoT services are another big group. The number of connected devices grows rapidly every year, and they produce enormous amounts of data. Yet it makes no sense to transfer each and every data point to the public cloud. To save bandwidth, ML models running at the edge can aggregate the data and perform simple calculations to help with decision making. The same goes for IoT software management, security updates, and device fleet control. All of these use cases make much more economic sense if they are deployed on small clouds at the edge of the network. If that is something that interests you, you can find more details on making a secure and manageable IoT device with Ubuntu Core here.
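As a rough illustration of the bandwidth argument, the sketch below collapses raw sensor readings into one small summary per window before anything leaves the edge site. It is plain aggregation rather than an ML model, and the function name, window size, and sample values are all hypothetical.

```python
from statistics import mean


def summarize_readings(readings, window_size):
    """Collapse raw readings into one summary dict per fixed-size window."""
    summaries = []
    for start in range(0, len(readings), window_size):
        window = readings[start:start + window_size]
        summaries.append({
            "count": len(window),
            "min": min(window),
            "max": max(window),
            "mean": mean(window),
        })
    return summaries


# Hypothetical temperature samples collected from one device.
raw = [20.0, 22.0, 21.0, 23.0, 30.0, 31.0]

# Six data points become two summaries; only these would be sent upstream.
summaries = summarize_readings(raw, window_size=3)
for summary in summaries:
    print(summary)
```

With larger windows the saving grows accordingly, at the cost of coarser data in the central cloud.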

CDN and data caching

CDN and data caching use the edge to store content as close as possible to the requesting client machine, thereby reducing latency and improving page load time, video quality, and gaming experience. The main benefit of a cloud over a legacy CDN is that you can analyze the traffic locally and make better decisions on which content to store, and in what type of memory. A great open source way to manage your edge storage and serve it in an S3-compatible way is Ceph.
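A minimal sketch of what "analyzing the traffic locally" could mean in practice: count requests seen at the edge site and keep only the most popular objects in the cache. The function name, the request log, and the popularity-only policy are assumptions for illustration; a real cache would also weigh object size, freshness, and storage tier.

```python
from collections import Counter


def pick_content_to_cache(request_log, capacity):
    """Return the `capacity` most requested object names, most popular first."""
    counts = Counter(request_log)
    return [name for name, _ in counts.most_common(capacity)]


# Hypothetical request log observed at one edge site.
request_log = ["video-a", "page-b", "video-a", "video-c", "video-a", "page-b"]

# With room for two objects, the two most requested ones are kept.
hot = pick_content_to_cache(request_log, capacity=2)
print(hot)
```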

GPU intensive services

GPU-intensive services such as AI/ML, AR/VR, and video analytics all rely on computing power being available to the mobile equipment user with low latency. Any device can benefit from a powerful GPU located on an edge micro cloud, making access to ML algorithms, APIs for video analytics, or augmented reality based services much easier. These capabilities are also revolutionizing the mobile gaming industry, giving gamers a more immersive experience and low enough latency to compete on a professional level. Canonical has partnered with NVIDIA to create a dedicated GPU-accelerated edge server.

MEC infrastructure requirements

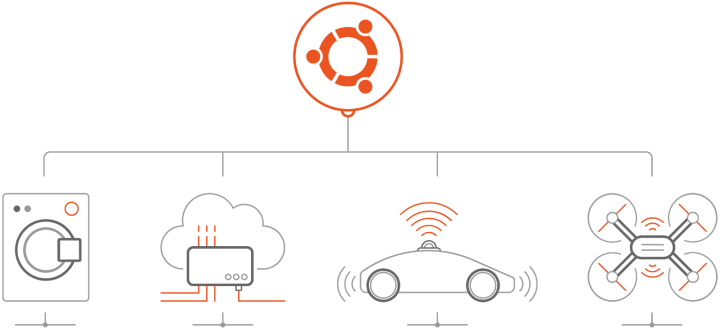

In order to have MEC you need some infrastructure at the edge, including compute, storage, network, and accelerators. In telecom cases, acceleration technologies like DPDK, SR-IOV, or NUMA-aware placement are very important, as they allow us to achieve the required performance of the whole solution. One bare metal machine is not enough, and two servers are just a single one and a spare. With three servers, which would be the minimum for an edge site, we have a cloud, albeit a small one. At Canonical these are called micro clouds: a new class of compute for the edge, made of resilient, self-healing, and opinionated technologies reusing proven cloud primitives.
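One way to see why three servers is a natural minimum is the arithmetic of majority quorum. The sketch below assumes the clustered services on the site need a strict majority of members to stay available; that is an assumption about the stack, not something this post specifies.

```python
def tolerated_failures(nodes):
    """Failures a majority-quorum cluster of `nodes` members can survive."""
    quorum = nodes // 2 + 1  # smallest strict majority
    return nodes - quorum


for n in (1, 2, 3, 5):
    print(n, "node(s) ->", tolerated_failures(n), "tolerated failure(s)")
```

Under that assumption a second server adds no fault tolerance on its own, while a third lets the site keep running after losing one machine.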

To choose a proper micro cloud setup, you need to know the workload that will run on it. Since the business need is to expose edge sites to a huge market of software developers, the platform has to be something they know and like. That’s why you don’t deploy OpenStack on the edge site. What you need is a Kubernetes cluster. The best-case scenario for your operations team is to manage, deploy, and upgrade such an edge site with the same tools they use in the main data center.

Typical edge site design

Canonical provides all the elements necessary to build an edge stack, top to bottom. There is MAAS to manage bare metal hardware, LXD clustering to provide an abstract layer of virtualization, Ceph for distributed storage, and MicroK8s to provide a Kubernetes cluster. All of these projects are modular and open source, and you can set them up yourself or reach out to discuss more supported options.

Obviously, the edge is not a single site. Managing many micro clouds efficiently is a crucial task facing mobile operators. I would suggest using an orchestration solution that communicates directly with your MAAS instances, without any intermediate “big MAAS” as the middle man. The only thing you need is a service that returns each site’s name, location, and network address. To simplify edge management even further, all open source components managed by Canonical use semantic channels. You might be familiar with them already, as you use one when you install software on your local desktop using:

sudo snap install vlc --channel=3.0/stable/fix-playback

A channel combines <track>/<risk>/<branch> with epochs to create a communication protocol between software developers and users. You will find the same concept used in charms, snaps, and LTS Docker images.
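To make the <track>/<risk>/<branch> structure concrete, here is a minimal sketch that splits a channel string into its parts. The function is hypothetical and not part of any snap tooling; it assumes the four snap risk levels (stable, candidate, beta, edge) and the snap convention that an omitted track means "latest". Other shortened forms, such as a track given on its own, are rejected here, and epochs are not modelled.

```python
RISKS = ("stable", "candidate", "beta", "edge")


def parse_channel(channel):
    """Split a channel string into its track, risk, and branch parts."""
    parts = channel.split("/")
    # A leading risk level means the track was omitted; assume "latest".
    if parts[0] in RISKS:
        parts.insert(0, "latest")
    if len(parts) < 2 or len(parts) > 3 or parts[1] not in RISKS:
        raise ValueError(f"not a valid channel: {channel!r}")
    branch = parts[2] if len(parts) == 3 else None
    return {"track": parts[0], "risk": parts[1], "branch": branch}


# The channel from the vlc command above, plus a shortened form.
print(parse_channel("3.0/stable/fix-playback"))
print(parse_channel("edge"))
```

Read this way, the vlc command asks for the 3.0 track at the stable risk level, on the temporary fix-playback branch.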

You now know what MEC is and what its underlying infrastructure looks like. I encourage you to try it out yourself and share your story with us on Twitter. This is a great skill to have, as major analyst companies now agree that edge micro clouds will take over from the public cloud and become the next big environment for which all of us will write software.